API Calls from Inside VRC

Can I see it Work?

Check out the demo world:

Just Give Me Code (Ok!)

1. Create videos of your data:

2. Decode the data and use it in your world:

What's going on here?

VRC is famously locked down. There might be good reasons for this, but the internet is made of connections between people and systems, and it's frustrating not to be able to take advantage of the last 30 years of web development.

Can we do anything about this? We need input and output. The one thing we can request from inside VRC is a video, which means we can make GET API calls (since these are simply URLs)*. Can we get data back? We can if the data is in the form of a video! But who would do such a thing...

* There are restrictions, see "what are the restrictions" below.

Step 1:

We create a web service which takes a URL GET request to any API and returns the results as an MP4 video which can be accessed from within VRC. The basic idea is that most web APIs return data in the form of JSON, which looks like this:

{"name":"anfox", "species":"fox"}

Convert it to base64, which looks like this:

eyJuYW1lIjoiYW5mb3giLCAic3BlY2llcyI6ImZveCJ9
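If you want to check this step yourself, here is a minimal sketch in Python (our choice for illustration only; the write-up doesn't say what language the web service uses). The JSON is kept as a literal string so the spacing, and therefore the base64 output, matches the example above.

```python
import base64

# The JSON text from the example above, kept as a literal string so that
# the spacing (and therefore the base64 output) matches exactly.
payload = '{"name":"anfox", "species":"fox"}'

# Encode the UTF-8 bytes of the JSON as base64 text.
encoded = base64.b64encode(payload.encode("utf-8")).decode("ascii")

# Decoding reverses the step and gives back the original JSON text.
decoded = base64.b64decode(encoded).decode("utf-8")

print(encoded)
print(decoded)
```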

Base64 is a neat "old school" scheme for representing data. It's not efficient, but at this point we have our data encoded using a set of just 64 symbols. With that it's possible to represent each value as an equivalent decimal number, and we can use that to indicate a color, or a property of a color (you could use it on just one of the RGB channels for instance, trebling your storage capacity). But let's say we just use it for a color directly ( 0 = A = black, 1 = B = a little less black, etc...).
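As a rough sketch of that direct mapping, assuming we spread the 64 symbols evenly over gray levels 0 to 255 (the exact palette is our assumption, and `char_to_gray` and `text_to_grays` are hypothetical helper names):

```python
import string

# Standard base64 alphabet: index 0 is "A", index 63 is "/".
B64_ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits + "+/"


def char_to_gray(ch):
    """Map one base64 symbol to a gray level: 'A' -> 0 (black), '/' -> 255 (white)."""
    index = B64_ALPHABET.index(ch)
    # Assumption: spread the 64 indices evenly over the 0..255 range.
    return index * 255 // 63


def text_to_grays(b64_text):
    """Turn a base64 string into one gray level per symbol."""
    # Padding "=" carries no data, so this sketch simply drops it.
    return [char_to_gray(ch) for ch in b64_text.rstrip("=")]


print(text_to_grays("eyJu"))
```

Neighbouring symbols end up only about 4 gray levels apart, so even a small shift in a pixel's value changes which symbol it decodes to.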

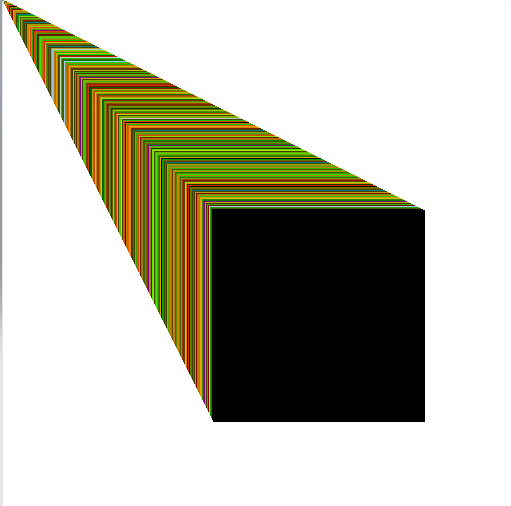

The above base64 string encoded as colored pixels looks like this:

It turns out that video compression causes colors to shift, and this shift isn't predictable (it can even change frame by frame or in different spots in the frame), but we can use a different encoding scheme. Instead of using colors, let's convert each of the digits to binary and encode these using black and white pixels. With that (see the table above), E = 4 = 000100, which becomes black-black-black-white-black-black, and so on.
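The black-and-white scheme can be sketched like this, assuming one pixel per bit with 0 for black and 255 for white (`char_to_bits` and `text_to_pixels` are hypothetical names for illustration, not the project's actual code):

```python
import string

# Standard base64 alphabet: index 0 is "A", index 63 is "/".
B64_ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits + "+/"


def char_to_bits(ch):
    """Return the 6-bit binary string for one base64 symbol."""
    return format(B64_ALPHABET.index(ch), "06b")


def text_to_pixels(b64_text):
    """Encode a base64 string as a flat list of pixels: 0 = black, 255 = white."""
    # Padding "=" carries no data, so this sketch simply drops it.
    bits = "".join(char_to_bits(ch) for ch in b64_text.rstrip("="))
    return [255 if bit == "1" else 0 for bit in bits]


print(char_to_bits("E"))
print(text_to_pixels("E"))
```

Each symbol now costs six pixels instead of one, but the decoder only has to tell "dark" from "light".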

One trouble with this is that binary representation is even less space-efficient than color representation, as you can see below (we need more pixels to represent the same amount of data), but it still works fairly well for small chunks of data. In this 128x128 image, we still have a lot of room for more information.
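To get a feel for how much room a frame has, here is some back-of-the-envelope arithmetic. It assumes each bit occupies a square block of `cell` x `cell` pixels and that the whole frame is used for data; both are assumptions for this sketch, since the write-up doesn't give the real layout.

```python
def capacity_bytes(width, height, cell=1):
    """Estimate how many payload bytes fit in one black-and-white frame.

    Assumption: every bit is drawn as a cell x cell block of pixels and
    the entire frame is available for data (no header or markers).
    """
    cells = (width // cell) * (height // cell)  # one bit per cell
    b64_chars = cells // 6                      # six bits per base64 symbol
    return (b64_chars // 4) * 3                 # four symbols carry three bytes


print(capacity_bytes(128, 128))          # one pixel per bit
print(capacity_bytes(128, 128, cell=4))  # 4x4 blocks per bit
```

With one pixel per bit that works out to 2046 bytes, and with 4x4 blocks it drops to 126 bytes. The 33-byte example above fits either way.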

Step 2:

We reverse the above process. We make a call to the video, which pulls it into the VRC world. We can then use U# to frame-grab the video, decode it to binary, convert the binary to base64, then convert that to JSON and then finally we can use that in our own code! (Well.. sort of... see "does this work" below).

Does this work?

Yes! Well.. almost! VRC can't run C# scripts the way Unity can. It's limited to a restricted subset of C# called UdonSharp (U#), which compiles to run on VRC's closed Udon VM. At the moment, we are not aware of anyone who has written a JSON parser in U# (and in fact, the C# JSON parser for Unity has been weirdly broken for 10 years anyway). Foorack has written an XML parser in U# though! So we switched to encoding the data as XML. As you can see below, this is even LESS space efficient than JSON in binary, but it's still workable.
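For a sense of the size cost, here is a sketch of the JSON-to-XML step on the server side. It only handles flat objects, and the `data` root element and the `json_to_xml` helper are made up for illustration; the actual XML layout the service produces isn't shown in this write-up.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_text, root_name="data"):
    """Convert a flat JSON object into a small XML document (sketch only)."""
    root = ET.Element(root_name)
    for key, value in json.loads(json_text).items():
        # One child element per key; nested objects are not handled here.
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


json_text = '{"name":"anfox", "species":"fox"}'
xml_text = json_to_xml(json_text)

print(xml_text)
print(len(json_text), "characters as JSON,", len(xml_text), "characters as XML")
```

The closing tags repeat every key name, which is where most of the extra pixels go.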

Finally there are some weirdnesses (see below) which prevent this from being a full-blown API solution, but it should open up new possibilities.

What's next?

There are many improvements possible, but one of the things we're working on is increasing information density by time. That is: what we've done is a single frame video, but you can transmit a whole lot more data if you use multiple frames. Imagine a database as a feature film :D
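One way the multi-frame idea could look, assuming each frame holds a fixed number of bits and the last frame is padded with zeros (this is a sketch of the concept, not something we've shipped, and `split_into_frames` is a hypothetical name):

```python
def split_into_frames(bits, bits_per_frame):
    """Split a string of '0'/'1' characters into equally sized frames."""
    frames = []
    for start in range(0, len(bits), bits_per_frame):
        chunk = bits[start:start + bits_per_frame]
        # Pad the final frame with zeros (black pixels) so every frame is full.
        frames.append(chunk.ljust(bits_per_frame, "0"))
    return frames


print(split_into_frames("1111111111", 4))
```

A real version would also need to record the payload length somewhere so the decoder knows where the padding starts.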

Can I use this? What are the restrictions?

- This code is "legal." It does not "hack" VRC, it is not a mod and as far as we're aware it doesn't violate any terms of service, it's just an exceedingly elaborate "who would ever do that" workaround.

- The biggest restriction we've found is that you cannot programmatically create URLs from U#. If a user doesn't type the URL directly, you are limited to specific URLs which you pre-define in code. This limits the ability to use this in a dynamic way (i.e. you can't spontaneously pass parameters to an API). However, you can do some UI tricks (see Roliga's demo) to "hide" the first part of a URL, for a search box for instance.

- Because of the above restriction, it's not really possible to pass any identifying information about who is making the API call. On the pixelproxy side you should be able to see the user's IP though, and in a public world it might be possible to cross-reference this with the VRC API that gets world info.

- As with media calls, you are limited to 1 call every 5 seconds and these can be throttled by the world without warning. There are no progress callbacks. This means this solution is less than ideal for a highly responsive UI, but it's fine for some basic "hail mary" data retrieval.

- It's not possible to get detailed error information or any headers, or to send a POST request, so you are limited to REST APIs that take GET requests.

If you read this far, thank you :D It's been a journey.

We would be totally delighted to hear from you if you find this useful!